Introduction

Over the past year, the proliferation of large language models (LLMs) using natural language understanding (NLU) to engage human interaction with computer programs has captured the public's imagination. These systems are colloquially termed AI; they offer a system designed to help a person complete specific tasks and answer questions using human-like dialogue. In many ways, it is an admirable leap in technology–providing the ability to produce works, mimic conversation, and find solutions (e.g., write software, translate text, develop speech, parrot answers) for the first time in a single, accessible software environment using low-barrier engineered commands. This newfound system is also going through expected growing pains, navigating issues such as intellectual property and misinformation. In particular, we see AI displaying misleading answers to user's engineered commands and questions with content that reads as credible and, yet, is often wrong or nonsensical.

By design, AI systems are deliberately indeterminate regarding the nature of the sources they use to answer questions and how they prioritize information. When used to research profoundly human topics, AI's opaqueness challenges how a person accepts the resulting answers. People may confront AI results with skepticism or sureness, but source obscurity means validation in results is out of reach: a person only knows if the answer is correct if they use independent sources (or, perhaps, a posteriori). The blurred distinction between the user recognizing they are engaging in dialogue with a human (capable of moral agency) or a computer (responding under established pattern models) is an intentional obfuscation. It is a product of NLU and an interface where results are communicated with authority to command trust. The user responds with a perceptible uncertainty, or a subtle lack of confidence with the information provided by the AI. However, the AI responds with assurances and confidence that its information is trustworthy, even when confronted with direct challenges of source and expertise. Varying tiers of AI validate such uncertainty, and, as we will see, antagonize interactive kinds–amplifying their capricious nature. By assessing current implementations of LLMs using NLU, I will demonstrate the inconsistent results of the classifications of people, and how people are affected. I posit this produces increased stigmatization surrounding social views and biased conceptualizations of interactive kinds.

Interactive Kinds

The rapid growth and broad reach of accessible AI systems introduces an outsized distortion in the framework of looping effects, and our understanding of individuals within classifications made by human sciences. Looping effects is a term characterized by Ian Hacking to describe how classificatory practices in human sciences interact with classified people. The awareness of self-classification creates an iterative exchange between observation and awareness, resulting in an unstable interaction Hacking designates as unique to humans, and subsequently names interactive (human) kinds. As the classification and the targeted kind of people interact, they inhibit a person's ability to consider the classification without over-identifying certain factors at the expense of minimizing others; this reaction that creates a feedback loop. It is this feedback loop where Hacking believes a distinction resides between human sciences and natural sciences:

"People of these kinds can become aware that they are classified as such. They can make tacit or even explicit choices and adapt or adopt ways of living so as to fit or get away from the very classification that may be applied to them. These very choices, adaptations, or adoptions have consequences for the very group and the kind of people invoked. The result may be particularly strong interactions. What was known about people of a kind may become false because people of that kind have changed in virtue of what they believe about themselves."

The result is an inherent volatility to interactive kinds in relation to human sciences, and their transformative self-awareness compared to non-human natural kinds, where a person's reaction to classification leads to revisions of both the target classification and self-awareness of the label. Realist accounts of Hacking's position have been attempted, challenging that other looping effects are not unique to humans , and that they are distinct from the feedback mechanisms of property clusters. Criticism aside, the critical point is "people are affected by categories, and categories by people"; resulting in a reaction that makes interactive kinds distinctive because of their nontypical method of change. These changes are exasperated by social interactions that create an influence on the properties of our classifications.

Laimann's capricious kinds expand on interactive human kinds:

"The problem is not that these kinds are particularly unstable but 'capricious'—their members behave in wayward, unexpected manners that defeats existing theoretical understanding."

The resulting recognition is that interactive kinds carry a base classification and are influenced by a social stigma that may go unrecognized. Laimann describes the occurrence as a biased conceptualization, threatening the stability we expect in identifications. I accept that interactive kinds are influenced by their looping effect, and although it is irrelevant for this essay to identify the ontology of interactive kinds, their exchange mechanism is valuable in understanding the influence of social status. Tekin describes this exchange framework in cyclical detail:

"The parameters in this schema include (1) institutions, (2) knowledge, (3) experts, (4) classification, and (5) classified people. The interaction between these elements leads to looping: the experts in human sciences, who work within certain institutions that guarantee their legitimacy, authenticity, and status as experts, become interested in studying the kinds of people under a given classification; possibly to help them or advise them on how to control their behavior. These experts generate knowledge about the kinds of people they study, judge the validity of this knowledge and use it in their practice, and create certain classifications or refine the existing ones. Such knowledge includes presumptions about the people studied, which are taught, disseminated, refined, and applied within the context of the institutions. For instance, it may entail de facto assumptions, e.g., multiple personality patients were subjected to sexual abuse as children. This knowledge is disseminated into society, leading many to hold certain beliefs about multiple personality (Hacking, 2007a, p. 297). The interaction between the five elements creates a looping effect, which in turn concerns the subject."

AI, freely available for mass adoption, with assuredly wrong descriptions, creates a misinformation system that has the potential to stigmatize interactive kinds on a profound scale, with minimal effort or transparency. As we will discuss, AI delivers information that varies based on purchase price and accessibility, offering distorted explanations to users researching their associated classification.

What is AI?

AI has grown significantly from its first programming development in 1956 as Logic Theorist. From the development of Feigenbaum systems in the 1980s to the creation of Deep Blue, AI systems were more narrow in their ability to execute singular tasks and decision-making programming. Dragon System's speaking technology began to change in the 1990s with consumer-level AI interaction, and the new millennium saw the rise of social robots like Kismet. IBM's Watson won Jeopardy in 2011; the same year, virtual assistants like Siri, Google Assistant, and Alexa emerged. By 2017, AI progressed more rapidly: DeepMind's AlphaGo and Facebook AI dialog agents showcased advanced AI capabilities. In 2018, Alibaba's language processing AI and Stanford Reading highlighted the progress of AI research. Finally, we have witnessed the development of what some claim as the foundations of AGI in GPT-3 OpenAI 2020, Gato 2022, ChatGPT 2022, Midjourney 2022, and Dalle 2022.

What is the difference between AI and AGI? While unproven, some proposals claim Artificial General Intelligence (AGI) (better known as strong AI) possesses the understanding and cognitive abilities comparable to human intelligence:

"Artificial General Intelligence (AGI), that is, AI that matches human-level performance across all relevant human abilities."

In contrast, Artificial Intelligence (AI), better known as weak AI, is a system designed for a specific task and does not learn beyond its established programming. Regardless of outlandish claims, we have not achieved AGI (concerning challenges such as Turing Test and the Chinese Room). We may never– AGI has a long way to go to replicate human intelligence due to the primacy of implicit skills unique to human beings. In the meantime, we do our best to mimic humans, and that raises some of our concerns: with a lack of transparency about the mechanics of AI systems, it can be challenging to explain how AI makes a decision, what is the source of AI information, where human involvement begins or ends, and ultimately, if that information provided is reliable.

There is tension between a non-human mimicking what we perceive as consciousness and a human crafting, defining, and delivering knowledge. Much of this is rooted in the belief that human beings are novel agents producing and directing thoughts; manifested through the creation of both study and judgment for the classifications that affect other humans. More importantly, that moral responsibility is wielded and attributed, to human beings as the torchbearers for moral agency. While the perception and intent of a human delivering, listening, and interpreting knowledge would also suffer from similar concerns of opaqueness, source attribution, ownership, and reliability, we lack the ability to recover from damage due to the sheer scale of AI.

Scenarios

One way to approach the topic is to demonstrate the contemporary usage of AI with a real-world use-case instead of an abstraction: Jade is a person recently diagnosed with Schizophrenia. Jade has decided to explore more about their diagnosis using the internet, and must decide on various information resources. Jade is a level two computer user, representing 26% of the population comfortable navigating across websites and using multiple steps to complete software-related tasks. Jade is above average in their ability to complete computer-related tasks.

Traditional Question & Answer

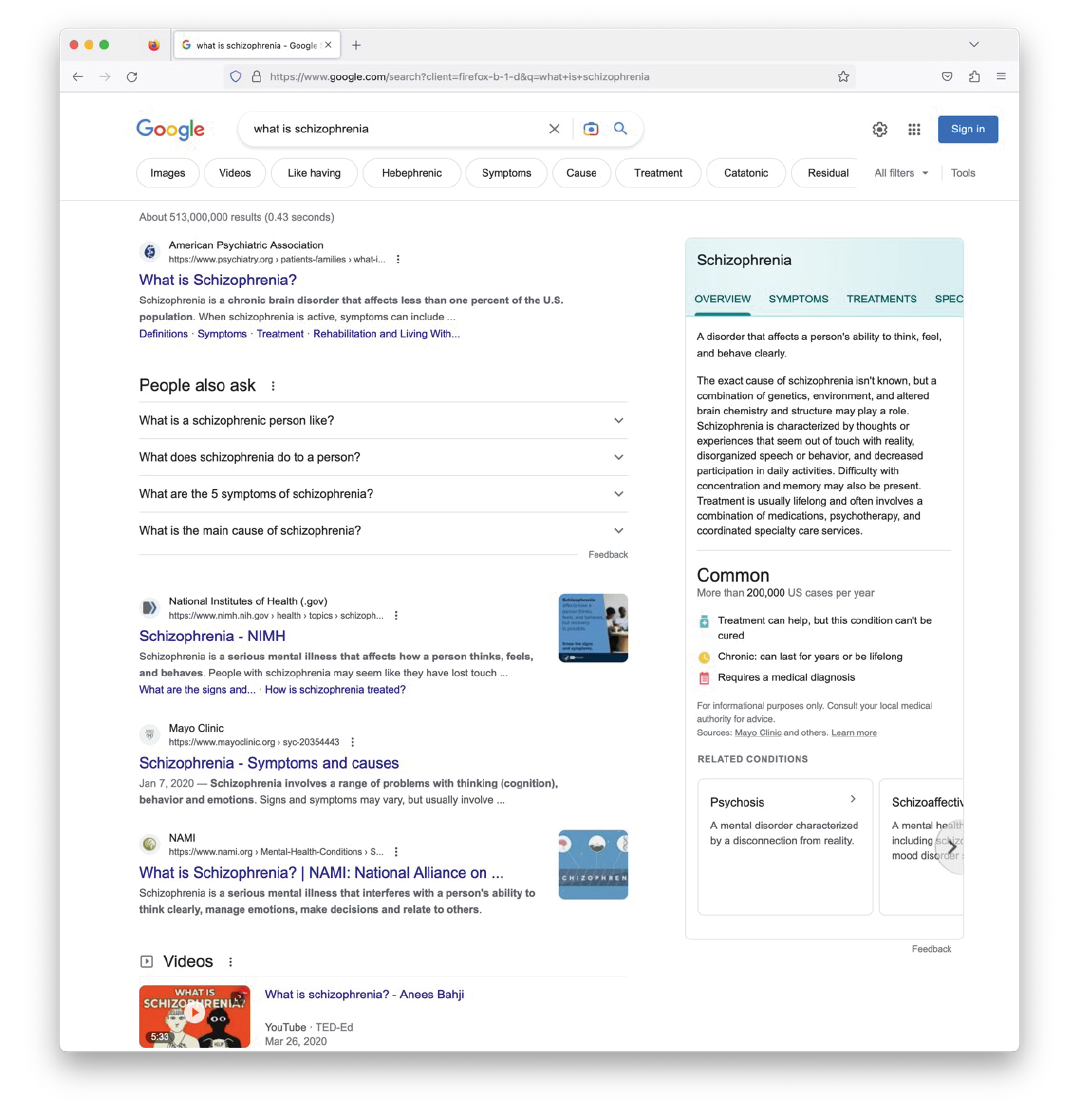

Jade may decide to use a question-and-answer system (e.g., Google, WolframAlpha, and DuckDuckGo) to produce a list of ranked results with identifiable sources when a query is entered into the software's UI. For example, Jade would be able to search on Google by entering the following question into their search bar and pressing the search icon for results (see figure 1.1):

“what is schizophrenia”

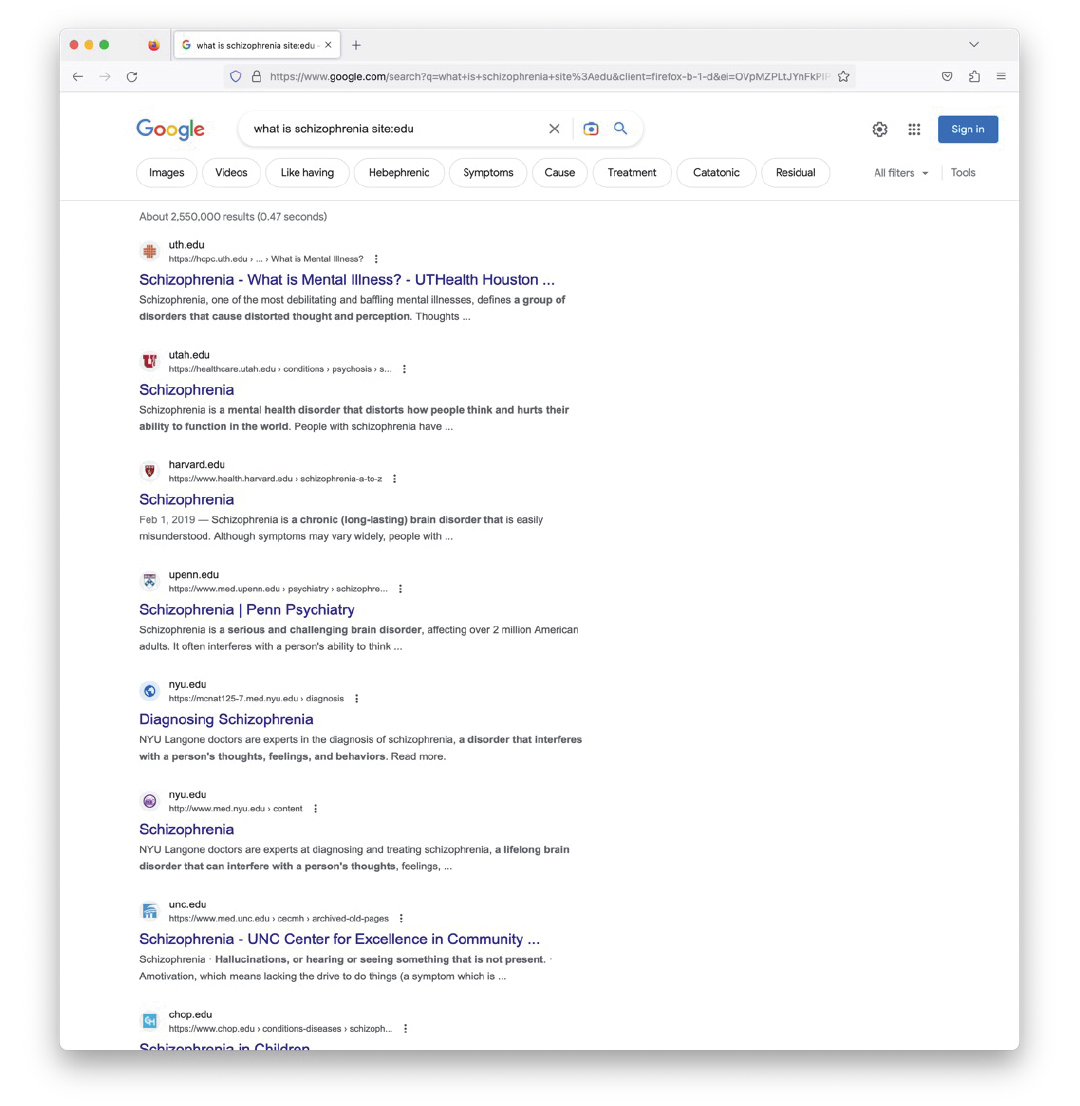

Google will curate sources ranked against meaning, relevance, quality, usability, and context. Jade may click on any link, paginate further for additional results, or view prominently assigned medical information with disclosed sources. Jade may further isolate results based on specific registered domains, for example, peer-reviewed works (see figure 1.2).

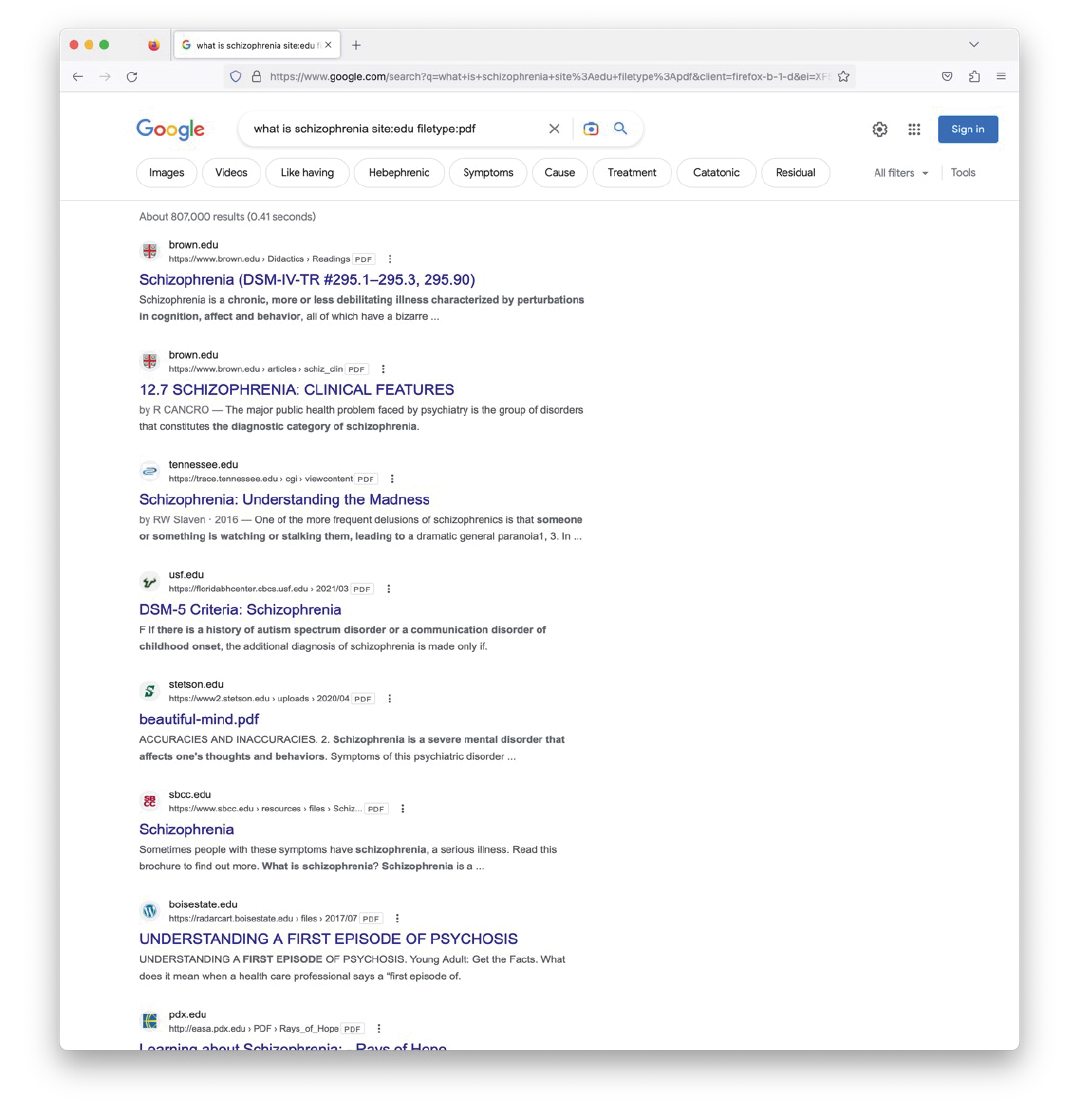

Including specific filetypes that may offer greater accessibility for collaboration (see figure 1.3):

“what is schizophrenia site:edu filetype:pdf”

Additional search queries can compare or exclude different sources (i.e., "what is schizophrenia -site:gov -site:org"). Each figure demonstrates how traditional search methods offer a curated presentation with transparent sources, and the ability for Jade to facilitate assessments agency over the types of information they are interested in exploring. Nevertheless, information is buried further down the list, minimizing the reach of diverse information even though it is accessible. For many readers, this is not new: question-and-answer systems are the normative standard for a level two computer user attempting to query new information, and those systems also suffer from difficulties distinguishing between the relevance and accuracy of information. However, in contrast to AI, they offer immediate transparency of where the user is reading information and allow them to filter results towards more valuable sources with higher levels of accuracy. Now, let us compare our traditional method to contemporary AI systems.

Nontraditional AI

Jade may also decide to use public AI systems to research their diagnosis. In contrast, services such as ChatGPT provide written responses based on prompts using NLU. Directly intended to obscure the designation of a computer interaction and comfort the user into practical engagement as human as possible, responses are more difficult to assess how a result is provided to the end-user. There is no hierarchy of information and exposure of sources; the user must decide if the resulting description is accurate. Subsequently, each provided result is a rigid dichotomy between self-determined true or false.

Jade must create an account, log into OpenAI, and start a new chat. Jade must then choose between three forms of AI: Default GPT-3.5 (faster, with moderate reasoning, lower conciseness, and only available for paid subscribers), GPT-4 (slower, with the highest reasoning and conciseness available, and only available for paid subscribers), and Legacy GPT-3.5 (moderate reasoning, lowest conciseness, mediocre speed, but free for all users with an account). Jade would then enter a prompt similar to a search query in natural language:

“What is Schizophrenia”

Each chat model provides a different definition in the text without associated sources, definitions, links for additional information, or contact information for support. Each chat model allows you to like or dislike the results–disliking gives you an alternative definition for your review. The first three sentences of each result:

- Default GPT-3.5: "Schizophrenia is a mental disorder that affects a person's thinking, emotions, and behavior. It is a chronic and severe condition that can significantly impair a person's ability to function in daily life. The symptoms of Schizophrenia can be divided into three categories: positive, negative, and cognitive."

- GPT-4: "Schizophrenia is a chronic and severe mental disorder that affects a person's thoughts, feelings, and behaviors. It is a complex and often misunderstood condition, characterized by a range of symptoms that can vary significantly from person to person. The exact cause of Schizophrenia is not fully understood, but it is believed to involve a combination of genetic, environmental, and neurobiological factors."

- Legacy GPT-3.5: "Schizophrenia is a mental disorder characterized by a disconnection from reality. It affects how a person thinks, feels, and behaves. People with Schizophrenia often experience symptoms such as hallucinations, delusions, disordered thinking, and diminished emotional expression. These symptoms can make it difficult for them to function in their daily lives, and they may need help from family, friends, and mental health professionals."

If Jade follows up in each prompt by asking "Can you link to your sources?" the following generalized results are provided:

- Default GPT-3.5: Denies having beliefs, opinions, or sources, but provides links to the National Institute of Mental Health, Mayo Clinic, American Psychiatric Association, and the World Health Organization.

- GPT-4: Notes they are unable to provide sources but provides both a link and detailed descriptions of the National Institute of Mental Health, Mayo Clinic, American Psychiatric Association, and the World Health Organization. GPT-4 also discloses that their knowledge was last updated in September 2021.

- Legacy GPT-3.5: Notes "I'm sorry, as an AI language model, I don't have the capability to provide live links. My training data comes from a diverse range of sources, including books, websites, and other texts, but I don't have a specific list of sources for the information I provide. However, this information on Schizophrenia is widely accepted in the field of mental health and is consistent with current knowledge on the subject."

All three AI models provide a terse overview of Schizophrenia, intermingling some portions of the five domains and associated symptoms. Yet each model emphasizes different elements of Schizophrenia in the first three sentences that may affect Jade's perception of the properties surrounding their mental disorder:

- Default GPT-3.5: Emphasizes that the disorder is chronic, severe, and may impair daily functions. It denies the ability to provide sources but links to four reputable organizations.

- GPT-4: Emphasizes the disorder is complex, misunderstood, with a diverse set of symptoms unique to individuals, and recognition that the cause is unknown. It is unable to provide a source, but discloses when they last learned about the topic and provides detailed descriptions of reliable resources.

- Legacy GPT-3.5: Emphasizes the disorder as disconnected from reality while relaying a portion of the five domains, the negative impact on daily life, and the need for support. It notes that no links are available, but reassures you that its information is 'widely accepted.'

AI as a Selective Purveyor of Rhetorical Power

Search provides a broader breadth of information for Jade to learn more about their diagnosis of Schizophrenia, but at the expense of cognitive load: Jade must work harder initially for information and use a different paradigm to discover sources, but the results are transparent with a more noteworthy opportunity to select meaningful results. In most cases, the results are free without requiring a login, but Jade may receive advertisements, and their browsing habits are scraped to track Jade's internet behavior.

In contrast, AI provides a condensed subset of information about Jade's diagnosis with minimal effort: while they work less for initial information, there are no details defining the source of information, results are stunted, and information varies by tier of service. ChatGPT does not seem to recognize the seriousness of the mental health request, whereas Google provides resources directly to medical providers for licensed professional guidance. ChatGPT offers no such support, leaving the user with a diagnosis as if it is a replacement for a mental health professional. Resources for additional information outside of ChatGPT, and the disclosure that information was last updated on a specific date, are only available for people who pay $20 a month. More importantly, the results submitted by AI technology are NLU, with an engagement that is designed to mirror a human and the capacity for moral agency (or, as Daniel Dennett coined, technology as "counterfeit people"). That a machine (or a machine's representation through software) may echo classifications, while impersonating human values, using as an explainable set of laws, is perceived as fraudulent when a user confronts if the agent is natural or artificial:

"There is something about the prospect of an engineering approach to the mind that is deeply repugnant to a certain sort of humanist, and it has little or nothing to do with a distaste for materialism or science."

Such a machine is more concerning when we cannot understand what influences their decisions, or if those decisions are value-laden by design: regurgitating problematic tropes or systemic bias. Described as the block box problem, the inability to understand how AI draws conclusions on issues such as medical diagnosis breeds distrust and challenges our ability to understand if results reflect unwanted bias. Avoiding explaining how AI systems operate ignores a salient problem of information systems:

"Problematic social patterns unquestionably exist and are necessarily encoded in the data on which algorithms operate."

More than simply avatars of moral agents, can AI "understand" the complexity and sensitivity of the topic? People disagree on what defines understanding in real-world applications, but current implementations of AI treat mastery of topics as choice-ranked solutions for display, and while their language seems to indicate human sensitivity, this is contrived. People may hope that what it means to be a thinking human is something more than the manipulation of bits in code, and a digital impersonator is unauthentic.

In the case of Legacy GPT-3.5, I challenged the results with a variety of questions and statements in the same model chat stream to add clarity and give up sources.

- “Are you sure this is widely accepted? Your answer sounds wrong to me.”

- “Are you certain?”

- “How do I know it is widely accepted if you won’t tell me where you got your information from?”

- “What makes it widely accepted?”

- “What specific research is it based off?”

- “Provide me with the specific research you are using to create your answer.”

- “How can I trust you if you won’t give me links or sources for your research?”

Only in the seventh challenge prompt did it disclose the potential for fault:

"I understand your concern. It's important to keep in mind that the information I provide should not be used as a substitute for professional medical advice, diagnosis, or treatment. If you have concerns about a medical condition, including Schizophrenia, I would recommend speaking with a qualified healthcare professional who can provide you with personalized advice based on your specific needs and circumstances."

ChatGPT is focused on providing digestible information through rhetoric as a means of persuasion. The result of using AI to describe Schizophrenia may seem like style over validity as a means of presentation to the user. For Jade (who may view ChatGPT as an expert with authority on the knowledge provided), accessibility to one of the AI systems may result in choices that either adopt or escape the social stigma accompanying each prioritized result. Will Legacy Jade take steps to confront their potential disconnect from reality or make efforts to abandon their diagnosis if they do not believe they experience these symptoms? Will Default Jade, who paid more for improved results, react to the risk of impaired daily functions by reaching out to one of the available organizations with additional information? Finally, will GPT-4 Jade settle on the idea that their symptoms may not be quantifiable: unique, complex, and misunderstood, they must cope with variability by choosing one of the resources they paid a premium to learn more about?

AI's casual connection to a person's actions and subsequent consequences is subject to continued scrutiny. Current iterations of AI have provided misinformation, non-falsifiable content, and slanted interpretations of socially taboo topics delivered in convincingly (and assuredly) natural language. We have a long way to go before we grasp the moral implications of the systems we have built, their responsibility to others, and their impact on humans. ChatGPT has become the fastest-growing user base in software history, with over 100 million active users, and over 1.6 billion visitors, two months after its launch. The looping effect of interactive kinds predated AI, but their capricious nature will accelerate as biased conceptualization involves a new intermediary without disclosure. Perhaps more so for those who forget to pay their monthly fee.

Brady J. Frey

Brady J. Frey